
2. Using Docker

Chapter 1 introduced what Docker is; this chapter introduces what Docker does

The Docker flow: Images to containers

Learning Outcome: Create a container from an image

In Docker, everything begins with an image.

- Docker image: an immutable (read-only) file that contains the source code, libraries, dependencies, tools, and all other files an application needs to run as a Linux app.

- Also called a snapshot

- Represents an app and its virtual environment at a specific point in time

- Acts as a template for starting a container

- A container is created from an image by adding a writable layer (the container layer) on top of the immutable image.

- An image can be used to create any number of containers, each isolated from the others

- Modifications in one container do not affect another
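To make the layer idea concrete, here is a minimal Python sketch of read-only image layers with a writable container layer on top. It is only a model: real Docker uses a union filesystem (such as overlay2), and the class names `Image` and `Container` and the file paths are hypothetical. Reads fall through from the container's writable layer to the image layers; writes only ever touch the writable layer.

```python
from types import MappingProxyType


class Image:
    """A hypothetical image: a stack of read-only layers (later layers on top)."""

    def __init__(self, *layers: dict[str, str]) -> None:
        # MappingProxyType gives a read-only view, mimicking immutable layers.
        self.layers = tuple(MappingProxyType(dict(layer)) for layer in layers)


class Container:
    """A hypothetical container: one writable layer on top of an image."""

    def __init__(self, image: Image) -> None:
        self.image = image
        self.writable: dict[str, str] = {}  # the "container layer"

    def read(self, path: str) -> str:
        # Look in the writable layer first, then image layers from top to bottom.
        if path in self.writable:
            return self.writable[path]
        for layer in reversed(self.image.layers):
            if path in layer:
                return layer[path]
        raise FileNotFoundError(path)

    def write(self, path: str, content: str) -> None:
        # Changes land only in this container's writable layer.
        self.writable[path] = content


if __name__ == "__main__":
    ubuntu = Image({"/etc/os-release": "Ubuntu"}, {"/etc/motd": "hello"})
    first, second = Container(ubuntu), Container(ubuntu)
    first.write("/etc/motd", "changed in first")
    print(first.read("/etc/motd"))   # changed in first
    print(second.read("/etc/motd"))  # hello
```

Both containers share the same image object, yet the change made in `first` is invisible to `second` and to the image itself.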

Command Lines:

docker run
- start a container from an image
- -it: run with an interactive terminal (-i keeps STDIN open, -t allocates a terminal)
- e.g. docker run -it ubuntu:latest bash
- bash is the COMMAND, i.e. the command given to the container to run after it is created

docker ps
- list all running containers

exit
- typed inside a container, terminates the container

The Docker flow: Containers to images

Learning Outcome: Create an image from a container (after modifying the container)

After you exit a container, the container is stopped but still exists

Command Lines:

docker ps -a
- list all containers, including stopped ones

docker ps -l
- list the last container

These commands are good for inspecting the state of containers

A stopped container can be used to create an image

Command Lines:

docker commit CONTAINER_ID
- create an image; returns a sha256 string as the image's ID

docker tag SHA256_IMAGE NEW_IMAGE_NAME
- tag (name) the image

The previous 2 commands can be merged into one:

docker commit CONTAINER_NAME/ID NEW_IMAGE_NAME
- create an image and tag it

Run processes in containers

Learning Outcome: How to run things in Docker

docker run

- starts a container

- gives the container a process, which becomes its main process

- The container stops when the main process stops

- Containers have names. If you don't give one, Docker generates one

Command Line:

docker run --rm -ti ubuntu:latest sleep 5
- --rm: tell Docker to remove the container after it exits

docker run -it ubuntu bash -c "sleep 3; echo all done"
- bash -c "sleep 3; echo all done" sleeps for 3 seconds, then prints "all done" on the terminal

docker run -d ...
- runs the container detached, in the background
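The order of the pieces in docker run matters: options go before the image, and everything after the image is the COMMAND. A small Python sketch can make that ordering explicit. The helper `docker_run_argv` is hypothetical; it only uses the flags shown above and builds the argument list without running anything.

```python
from typing import Optional, Sequence


def docker_run_argv(
    image: str,
    command: Sequence[str] = (),
    *,
    name: Optional[str] = None,
    interactive: bool = False,
    detach: bool = False,
    remove: bool = False,
) -> list[str]:
    """Build a `docker run` argument list; options must come before the image."""
    argv = ["docker", "run"]
    if remove:
        argv.append("--rm")        # delete the container when it exits
    if interactive:
        argv.append("-it")         # keep STDIN open and allocate a terminal
    if detach:
        argv.append("-d")          # run in the background
    if name:
        argv += ["--name", name]   # otherwise Docker generates a name
    argv.append(image)
    argv.extend(command)           # everything after the image is the COMMAND
    return argv


if __name__ == "__main__":
    argv = docker_run_argv("ubuntu", ["bash", "-c", "sleep 3; echo all done"], remove=True)
    print(argv)
    # To actually run it (requires Docker): subprocess.run(argv, check=True)
```

Passing a list (rather than one shell string) keeps "sleep 3; echo all done" as a single argument to bash -c, with no extra quoting.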

docker attach

docker attach CONTAINER_NAME
- attach to a running container
- Ctrl+P, then Ctrl+Q: detach from the container, leaving it running

docker exec

- Starts another process in an existing container

- Great for debugging and DB administration

- Can't add ports, volumes, and so on (that is only possible through docker run)

Command Line:

docker exec -ti CONTAINER_NAME bash
- run another bash shell in the container

Manage containers

Looking at Container Output

docker logs

- Keeps the output of a container until the container is removed

- View with docker logs CONTAINER_NAME

- Don't let the output get too large

e.g.

docker run --name CONTAINER_NAME -d ubuntu bash -c "lose /etc/passwd"
- fails because lose is a typo of less; docker logs CONTAINER_NAME can be used to inspect the error

Stopping and Removing Container

docker kill CONTAINER_NAME

- Kills a container (without entering it interactively and typing the exit command)

docker rm CONTAINER_NAME/CONTAINER_ID

- A killed container is stopped but still exists

- docker rm removes it

Resource Constraints

A feature of Docker: you can set fixed resource limits

- Memory limits

docker run --memory maximum-allowed-memory image-name command

- CPU (time) limits

docker run --cpu-shares
- relative to other containers: sets the relative share of CPU time a container can have

docker run --cpu-quota
- sets a hard limit on CPU time

- Orchestration systems (described later) generally require resource limits
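The two CPU flags behave differently, which a bit of arithmetic shows. This Python sketch assumes Docker's defaults: --cpu-shares is a weight (default 1024) that only matters when containers compete for CPU, and --cpu-quota is in microseconds per --cpu-period (default 100000 µs). The container names are hypothetical.

```python
def cpu_share_fractions(shares: dict[str, int]) -> dict[str, float]:
    """Fraction of CPU time each container gets when all of them are busy."""
    total = sum(shares.values())
    return {name: weight / total for name, weight in shares.items()}


def quota_to_cpus(cpu_quota_us: int, cpu_period_us: int = 100_000) -> float:
    """Hard cap expressed as a number of CPUs: quota / period."""
    return cpu_quota_us / cpu_period_us


if __name__ == "__main__":
    # Hypothetical containers started with --cpu-shares 1024 and --cpu-shares 512:
    # under full contention "web" gets 2/3 of the CPU and "batch" gets 1/3.
    print(cpu_share_fractions({"web": 1024, "batch": 512}))
    # --cpu-quota 50000 with the default period caps a container at half a CPU.
    print(quota_to_cpus(50_000))  # 0.5
```

Shares are relative, so idle CPU stays usable by any container; a quota is a ceiling even when the machine is otherwise idle.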

Tips in practice:

- Don't let containers fetch dependencies when they start

- e.g. if upstream makes a change (removes a library, etc.), it affects your container

- Hence, include the dependencies in the image

- Don't leave important things in unnamed stopped containers; you may prune them accidentally

Exposing ports

Container Networking

- Programs in containers are isolated from the Internet by default

- Containers can be connected using "private" networks, which are still separated from the Internet

- The host machine can expose ports to let Internet connections into a container

So, private networks plus exposed ports make connections possible

Exposing a Specific Port

- How to expose a specific port: explicitly specify the port inside the container and the port outside

- You can expose as many ports as you want

- Requires coordination between containers

- Makes it easy to find exposed ports

e.g.

- Open a terminal and create a simple server in Docker:

docker run --rm -ti -p 45678:45678 -p 45679:45679 --name echo-server ubuntu:14.04 bash

- This exposes the container's port 45678 as port 45678 on localhost, and the container's port 45679 as port 45679 on localhost

- In the container, create a simple echo server with

nc -lp 45678 | nc -lp 45679

It listens on port 45678 and sends the data to port 45679 via a Linux pipe

- Open a 2nd terminal and enter nc localhost 45678 to start a sender

- Open a 3rd terminal and enter nc localhost 45679 to start a listener

- Enter anything in the sender terminal; the data will be echoed in the listener terminal
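What the nc -lp 45678 | nc -lp 45679 pipeline does can be sketched in plain Python, without Docker: two listening sockets and a loop that copies bytes from the client on the first port to the client on the second. The function names are hypothetical, and the sketch lets the OS pick free ports instead of 45678/45679 so it can't clash with anything already running.

```python
import socket
import threading


def make_listener() -> socket.socket:
    """Listen on 127.0.0.1 on a free port chosen by the OS (port 0)."""
    srv = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    srv.bind(("127.0.0.1", 0))
    srv.listen(1)
    return srv


def relay(in_srv: socket.socket, out_srv: socket.socket) -> None:
    """Like `nc -lp A | nc -lp B`: copy bytes from the client on A to the client on B."""
    sender, _ = in_srv.accept()
    listener, _ = out_srv.accept()
    with sender, listener, in_srv, out_srv:
        while chunk := sender.recv(4096):  # empty bytes means the sender closed
            listener.sendall(chunk)


def run_demo(payload: bytes) -> bytes:
    """Play the nc clients from the 2nd and 3rd terminals against the relay."""
    in_srv, out_srv = make_listener(), make_listener()
    in_port, out_port = in_srv.getsockname()[1], out_srv.getsockname()[1]
    worker = threading.Thread(target=relay, args=(in_srv, out_srv))
    worker.start()
    with socket.create_connection(("127.0.0.1", in_port)) as sender, \
         socket.create_connection(("127.0.0.1", out_port)) as listener:
        sender.sendall(payload)
        sender.shutdown(socket.SHUT_WR)  # signal end of input, like Ctrl+D in nc
        received = b""
        while chunk := listener.recv(4096):
            received += chunk
    worker.join()
    return received


if __name__ == "__main__":
    print(run_demo(b"hello from the sender\n"))
```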

Exposing Ports Dynamically

Useful when running multiple containers:

- The port inside the container is fixed, as given (e.g. docker run -p 45678 ... gives only the inside port)

- The port on the host is assigned by Docker from the unused ports

- This allows many containers to run programs with the same fixed internal ports

- Often used with a service discovery program (e.g. Kubernetes, k8s)

docker port CONTAINER_NAME

- shows the port mapping
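With dynamic ports you have to look up which host port Docker picked. A Python sketch of reading that information back: it assumes docker port prints one mapping per line in the form `45678/tcp -> 0.0.0.0:49153` (some setups add an IPv6 line such as `[::]:49153`). The function name and the host port numbers in the sample are hypothetical.

```python
def parse_docker_port(output: str) -> dict[str, list[tuple[str, int]]]:
    """Map 'PORT/PROTO' inside the container to (host_ip, host_port) pairs."""
    mappings: dict[str, list[tuple[str, int]]] = {}
    for line in output.splitlines():
        line = line.strip()
        if "->" not in line:
            continue  # skip blank or unexpected lines
        container_side, host_side = (part.strip() for part in line.split("->", 1))
        # rpartition splits on the last ":", so IPv6 hosts like "[::]" stay intact.
        host_ip, _, host_port = host_side.rpartition(":")
        mappings.setdefault(container_side, []).append((host_ip, int(host_port)))
    return mappings


if __name__ == "__main__":
    # Hypothetical output for a container started with: docker run -p 45678 -p 45679 ...
    sample = "45678/tcp -> 0.0.0.0:49153\n45678/tcp -> [::]:49153\n45679/tcp -> 0.0.0.0:49154\n"
    for inside, hosts in parse_docker_port(sample).items():
        print(inside, hosts)
```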

Exposing UDP Ports

Docker works with protocols other than TCP, such as UDP

docker run -p outside-port:inside-port/protocol
- e.g. docker run -p 1234:1234/udp

nc -ulp 45678 starts nc listening on port 45678 using the UDP protocol
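UDP has no connection setup: the sender just fires a datagram at an address. This Python sketch shows a single UDP round trip on localhost, the same kind of traffic nc -ulp listens for; the function name is hypothetical and the port is chosen by the OS.

```python
import socket


def udp_roundtrip(message: bytes, timeout: float = 2.0) -> bytes:
    """Send one UDP datagram to a local receiver and return what it got."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as receiver, \
         socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sender:
        receiver.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        receiver.settimeout(timeout)     # no connection, so don't wait forever
        port = receiver.getsockname()[1]
        sender.sendto(message, ("127.0.0.1", port))  # no handshake, just a datagram
        data, _sender_addr = receiver.recvfrom(65535)
        return data


if __name__ == "__main__":
    print(udp_roundtrip(b"meow"))  # b'meow'
```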

Container networking

Connecting directly between Containers

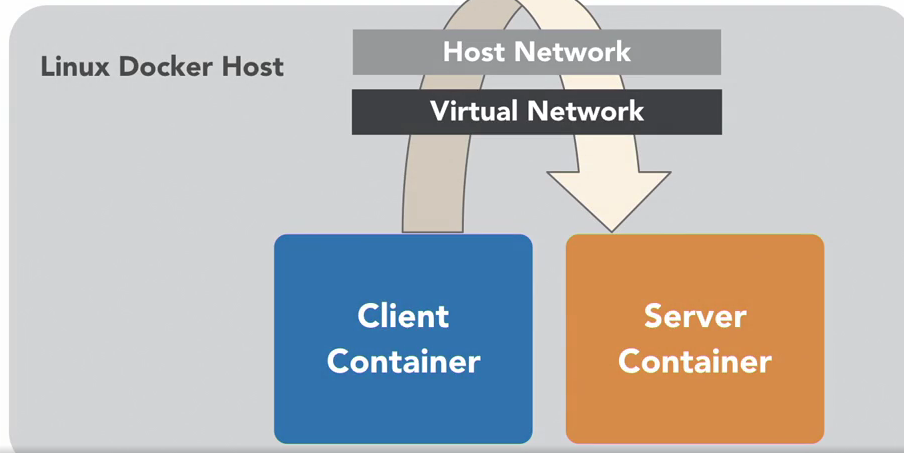

Container connection method 1: create a network that from outside of machine (i.e. expose to internet) to container

Connection established by:

Connection established by:

- Container reach out to the host level (connect to localhost)

- Then turning back to another container

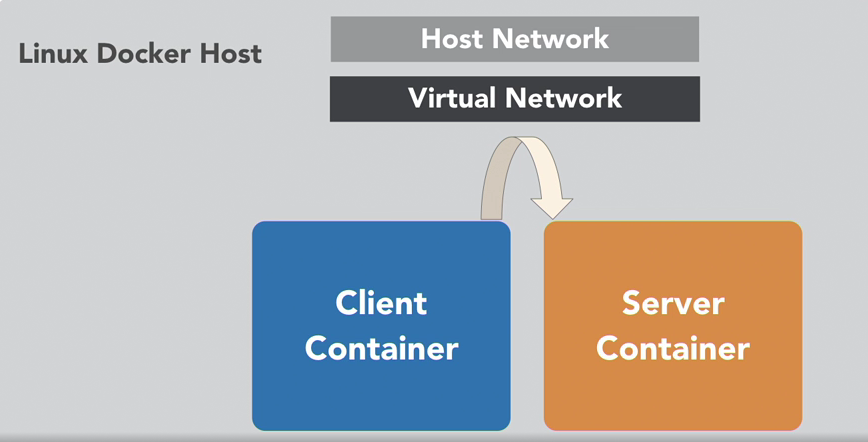

Container connection method 2; create a private network that only link between containers

docker network ls

- show established networks

- bridge: the network used by containers that don't specify a network preference
- host: containers in this network have no network isolation (they connect directly to the host's network interface)
- none: for containers with no networking

docker network create NETWORK_NAME

- Create a new network and return its ID (a shasum)

docker run --rm -ti --net NETWORK_NAME --name CONTAINER_NAME IMAGE:TAG cmd

- Connect a container to a network

Exercise 1: 2 containers on the same network connecting to each other

- Create a network named learning

docker network create learning

- Create two containers connected to the learning network that can ping each other

- Create a container connected to the learning network

docker run --rm -ti --network learning --name catserver ubuntu:14.04 bash

- In catserver, we can ping the container itself

ping catserver

- Create a 2nd container "dogserver" that is also connected to the learning network

docker run --rm -ti --network learning --name dogserver ubuntu:14.04 bash

- In catserver, ping the dogserver container

ping dogserver

- In dogserver, we can ping the catserver container as well

ping catserver

- Use nc to transfer information

- In dogserver, listen for incoming connections on port 1234

nc -lp 1234

- In catserver, connect to port 1234 of dogserver

nc dogserver 1234

Exercise 2: Create multiple networks to manage containers separately

- Create a catsonly network

docker network create catsonly

- Attach catserver to the catsonly network

docker network connect catsonly catserver

- Create a container connected to catsonly

docker run --rm -ti --net catsonly --name bobcatserver ubuntu:14.04 bash

- Then, catserver and bobcatserver can communicate

- Because catserver is also connected to the learning network, catserver can still communicate with dogserver

About 90% of Docker network usage is covered up to this point; more options are available for further research

Legacy linking

The old way of connecting containers, from before Docker networks. It should be avoided, but it still remains in some products.

- Links all ports, but only in one direction

- Secret environment variables are shared only one way

- e.g. env vars such as a DB password are visible to any container that later links to it

- The reverse is not true: the server cannot see the env vars of the containers that link to it

- Hence, links depend on startup order

- Restarts only sometimes break the links

Exercise 1:

docker run --rm -it -e SECRET=theinternetlovescat --name catserver ubuntu:14.04 bash

docker run --rm -it --link catserver --name dogserver ubuntu:14.04 bash

- In catserver, run nc -lp 1234; in dogserver, run nc catserver 1234. So dogserver is linked to catserver

- The reverse is not true: catserver cannot ping dogserver

- The env var SECRET=theinternetlovescat is also copied to dogserver as CATSERVER_ENV_SECRET=theinternetlovescat. But catserver cannot see env vars from dogserver
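The renaming seen in the exercise (SECRET becomes CATSERVER_ENV_SECRET) follows an `<ALIAS>_ENV_<NAME>` pattern. A simplified Python sketch of that one-way copy: the function name is hypothetical, the hyphen-to-underscore handling is an assumption, and real Docker also adds other variables (such as `<ALIAS>_NAME` and `<ALIAS>_PORT_...`) that this sketch ignores.

```python
def link_env(alias: str, source_env: dict[str, str]) -> dict[str, str]:
    """Env vars a --link'ed container would see for the linked container's env."""
    prefix = alias.upper().replace("-", "_")  # hyphen handling is an assumption
    return {f"{prefix}_ENV_{name}": value for name, value in source_env.items()}


if __name__ == "__main__":
    catserver_env = {"SECRET": "theinternetlovescat"}
    # dogserver ran with --link catserver, so it receives catserver's variables...
    print(link_env("catserver", catserver_env))
    # ...but nothing flows the other way: catserver gets no DOGSERVER_ENV_* vars.
```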

Images

Learning Outcome: Learn how to manage images

Listing Images

docker images

- Lists downloaded images

- The SIZE column shows the full size of each image, but image layers may be shared between images, so the real disk footprint is smaller
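Why the SIZE column overstates disk use is easy to see with a little arithmetic. In this Python sketch the layer IDs and sizes (in MB) are made up; the point is only that a layer shared by two images is stored once but counted in both images' sizes.

```python
def footprints(images: dict[str, dict[str, int]]) -> tuple[int, int]:
    """Return (sum of per-image sizes, disk use with shared layers counted once)."""
    listed = sum(sum(layers.values()) for layers in images.values())
    unique_layers: dict[str, int] = {}
    for layers in images.values():
        unique_layers.update(layers)  # the same layer ID is stored only once
    return listed, sum(unique_layers.values())


if __name__ == "__main__":
    # Hypothetical layer IDs and sizes in MB: both images build on the same base.
    images = {
        "app-a:latest": {"base-ubuntu": 70, "app-a-code": 10},
        "app-b:latest": {"base-ubuntu": 70, "app-b-code": 20},
    }
    print(footprints(images))  # (170, 100)
```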

Tagging Images

- Tagging gives images names

docker commit CONTAINER_ID NAME:TAG
- tags the image for you; if you don't specify a tag, the image is tagged as latest

- Naming structure:

registry.example.com:port/organization/image-name:version-tag

- You can leave out the parts you don't need

organization/image-name is often enough
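A Python sketch of splitting a name with this structure into its parts. It assumes the rule that the first part is a registry only if it contains a "." or ":" or is "localhost", and that a missing tag means latest. It ignores digests (`@sha256:...`) and Docker Hub's implicit `docker.io/library/` prefix; `ImageRef` and `parse_image_ref` are hypothetical names.

```python
from typing import NamedTuple, Optional


class ImageRef(NamedTuple):
    registry: Optional[str]
    repository: str
    tag: str


def parse_image_ref(ref: str) -> ImageRef:
    """Split 'registry:port/organization/image-name:tag' into its parts."""
    registry = None
    first, sep, rest = ref.partition("/")
    # Only treat the first part as a registry if it looks like a host name.
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, ref = first, rest
    # A tag is a ":" that comes after the last "/" (not a registry port).
    last_slash, colon = ref.rfind("/"), ref.rfind(":")
    if colon > last_slash:
        return ImageRef(registry, ref[:colon], ref[colon + 1:])
    return ImageRef(registry, ref, "latest")


if __name__ == "__main__":
    print(parse_image_ref("registry.example.com:5000/organization/image-name:version-tag"))
    print(parse_image_ref("organization/image-name"))
```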

Getting images

docker pull
- Run automatically by docker run
- Useful for offline work (pull images while you are online)

docker push
- The opposite of docker pull: publishes an image to a registry

Cleaning Up

- Images can accumulate quickly

- Remove an image:

docker rmi IMAGE_NAME:TAG
docker rmi IMAGE_ID

- Write a shell script to do repetitive cleanup jobs
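As an example of such a cleanup job, here is a Python sketch that picks untagged ("dangling") images out of a listing and builds one docker rmi call. It assumes the listing comes from docker images --format "{{.ID}} {{.Repository}}:{{.Tag}}", where untagged images show up as `<none>:<none>`; the function names and image IDs are hypothetical. Docker's own docker images -f dangling=true -q and docker image prune cover the same ground.

```python
def dangling_image_ids(images_output: str) -> list[str]:
    """Pick IDs of untagged images from '<ID> <REPOSITORY>:<TAG>' lines."""
    ids = []
    for line in images_output.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[1] == "<none>:<none>":
            ids.append(parts[0])
    return ids


def rmi_argv(image_ids: list[str]) -> list[str]:
    """Build a single `docker rmi` call that removes all the given images."""
    return ["docker", "rmi", *image_ids]


if __name__ == "__main__":
    # Hypothetical listing; IDs are made up.
    listing = "3f57d9401f8d ubuntu:14.04\na1b2c3d4e5f6 <none>:<none>\n9c8b7a6d5e4f catserver:latest\n"
    print(rmi_argv(dangling_image_ids(listing)))
    # To actually run it (requires Docker): subprocess.run(argv, check=True)
```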

Volumes

Learning Outcome: Sharing data between container and host

Volumes of a container:

- are virtual "discs" for storing and sharing data

- 2 main varieties

- Persistent: data is stored permanently

- Ephemeral: deleted when no container is using them

- Volumes are not part of images

Sharing Data with Host (Persistent)

docker run -v VOLUME_HOST_PATH:VOLUME_CONTAINER_PATH ...
- shares a directory on the host with the container (like a "shared folder" with the host). Changes made in the container affect the host files

docker run -v VOLUME_HOST_FILE_PATH:VOLUME_CONTAINER_FILE_PATH ...
- shares a single file into a container
- Note: the file must exist before starting the container, otherwise it's assumed to be a directory
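Because a missing host file silently turns into a directory, it can help to check the path before building the -v argument. A Python sketch of that check; `bind_mount_arg` is a hypothetical helper, and it makes the host path absolute because a bare name such as `mydata` in -v is treated as a named volume rather than a host path.

```python
import os


def bind_mount_arg(host_path: str, container_path: str) -> list[str]:
    """Build a `-v HOST:CONTAINER` argument, refusing host paths that don't exist."""
    if not os.path.exists(host_path):
        # Otherwise Docker would assume a directory and create an empty one there.
        raise FileNotFoundError(f"{host_path} does not exist on the host")
    # A bare name (e.g. "mydata") would be a named volume, so use an absolute path.
    return ["-v", f"{os.path.abspath(host_path)}:{container_path}"]


if __name__ == "__main__":
    print(bind_mount_arg(".", "/shared-folder"))
```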

Sharing Data between Containers (Can be Ephemeral)

docker run -v /CONTAINER_PATH

- Create an ephemeral volume mounted at that path in the container

docker run --volumes-from CONTAINER_NAME

- Shared "discs" that exist only as long as they are being used

- Can be shared between containers

Exercise: sharing data between containers

- Create a container with a shared volume:

docker run -it -v /shared-data ubuntu:14.04 bash

- Create a file within the shared volume:

echo hello > /shared-data/data-file

- Create a 2nd container that also connects to the shared volume:

docker run -it --volumes-from CONTAINER_NAME ubuntu bash

- We can find the file we just created:

cat /shared-data/data-file

- The shared volume is inherited by the 2nd container, even after the 1st container exits, and can be inherited further

- But when all containers that share the volume exit, the volume is gone (i.e. ephemeral)

Docker registries

- Docker images are retrieved from and published to registries

- A registry is a program

- Registries manage and distribute images

- Docker (the company) offers Docker Hub, free to use

- You can also run a private registry

Finding Images

docker search

- searches for images

- Same as searching on the Docker Hub website

docker login

- logs into Docker Hub, so you can push (publish) images

Tips:

- Don't push images that contain passwords

- Clean up images regularly

- Be careful which images you use; make sure they are official